Please use the rating scale below to indicate your satisfaction with this training:

☹ – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – – 😊

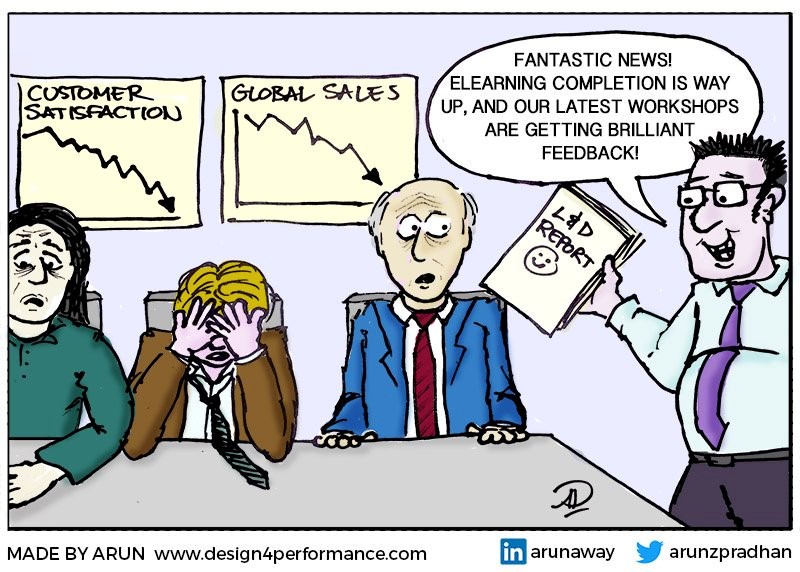

Evaluation is so much more than a questionnaire at the end of the learning product!

The purpose of evaluation is to provide information to either inform further developments of the learning materials (formative evaluation) or provide judgement on the effectiveness of the learning materials (summative evaluation). Often people think of evaluation as being the final step in the development process, but in fact, it should be considered from the outset.

When starting a project, you need to be clear about what kinds of decisions you (or other stakeholders) will need to make, the kind of evaluation information that will be required, and how this can practically be collected. Having a mindset, a skillset and a toolset for evaluation is a great start for the learning design process. That’s right, evaluation is a design starting point, not just something to consider once everything else is done.

This is where an evaluation mindset comes in – if we have a design process focused from the beginning on performance improvement, then we have to consider what we can evaluate as the measure of that improvement. It’s Stephen Covey’s ‘begin with the end in mind’ idea applied to learning design.

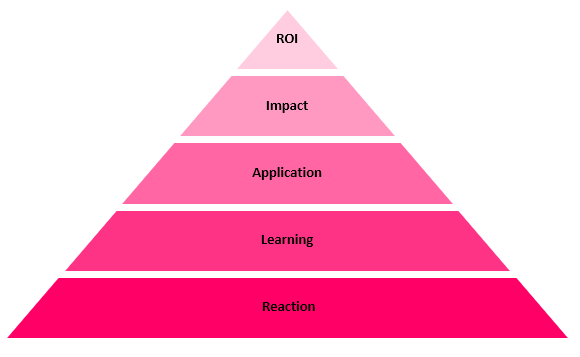

Evaluation frameworks abound. Two of the best known are the four-level Katzell-Kirkpatrick model and the Phillips ROI model. How we Learning & Development people love our pyramids! In this diagram these two models’ descriptions of the levels are blended:

The levels of these models each have an influence on the evaluation toolset and skillset choices made. Each level is progressively more challenging to evaluate.

- Did participants have a positive reaction to the training?

How people are reacting to the learning experience is important to you as the designer/facilitator, and may influence the likelihood that they will progress to the ‘higher’ levels of the models. The affective learning environment (how people feel in and about the experience) can be challenging to influence in the digital space, but you do need to at least consider this as you design – platform, graphics, colour palette, language, navigation – all influence the user experience.

- Did participants learn new knowledge, skills or attitudes?

Evaluation at this level will include questions that uncover whether knowledge, skills and attitude have been improved, as well as whether the design aided learning. This may influence the content that you include, how it’s presented and the processes that you design (interactions, flow, scenarios).

- Did participants apply what they had learnt to their work or life situation?

Pre-surveys of participants and their managers can provide a benchmark against which to compare post-training workplace performance.

- Was there an impact to the organisation as a result of the training?

Organisational results may be evaluated through KPIs and a range of readily-available operational metrics – for example, staff attrition rates or sales figures. If the available data metrics are considered in the design process, they may influence what information is gathered in relation to the training.

- Was there a positive return on investment as a result of the training?

ROI is sometimes simple to calculate where the training initiative can be isolated as the only variable in relation to performance measures. The cost of training (development, delivery, time) compared to savings/profit made as a result of the training can provide an ROI figure.
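As a rough illustration of that comparison, here is a minimal Python sketch. It assumes the commonly used form of the calculation, where ROI (%) is net benefits (benefits minus costs) divided by costs, multiplied by 100. The function name and all of the dollar figures are hypothetical and only there to show the arithmetic.

```python
def training_roi_percent(benefits: float, costs: float) -> float:
    """Return ROI as a percentage: (benefits - costs) / costs * 100."""
    if costs <= 0:
        raise ValueError("costs must be positive")
    return (benefits - costs) / costs * 100


# Hypothetical cost breakdown: development, delivery and participant time.
costs = 20_000 + 10_000 + 10_000

# Hypothetical savings/profit attributed to the training.
benefits = 60_000

roi = training_roi_percent(benefits, costs)
print(f"ROI: {roi:.0f}%")
```

A figure above zero means the training returned more than it cost. The calculation is only as trustworthy as the benefits figure, which is why isolating the training as the only variable matters so much.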

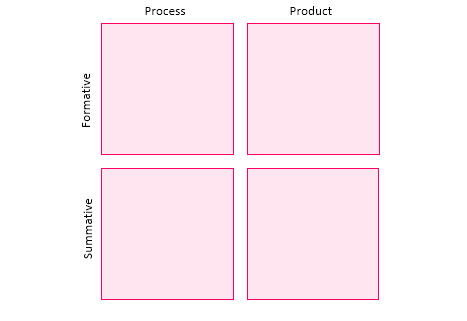

Project process evaluation

It’s not just evaluation of the learning experience that’s important. In order to stay at the top of our game, learning designers need to evaluate the project process itself. Consider this model:

Consideration of the initial/draft design in relation to proposed evaluation measures would be formative process evaluation.

Taking the time during a project for prototyping unfamiliar/risky areas and failing (and learning) fast would be formative product evaluation.

Considering the positive and negative aspects of the project once it has concluded (summative process evaluation) is an investment in future design success.

Measuring the impact of a project in terms of business outcomes and ROI would be summative product evaluation.

Which quadrant do you put most of your evaluation time/effort into? Consideration of all elements of evaluation will have a positive impact on your design processes and your learning/performance outcomes. When your mindset, skillset and toolset for evaluation are all aligned, you won’t just be designing good learning experiences, you’ll be designing in such a way that good learning experiences are inevitable. Now that’s something to 😊 about.

Interested in a deep dive?

Curious about the Katzell-Kirkpatrick reference? Will Thalheimer outlines the case in Work Learning.

Here’s a great infographic on evaluation levels.

If you’re up for a Radical Rethinking of a Dangerous Art Form, this article, based on the book by Will Thalheimer, will help you redesign your ‘smile-sheets’ to be performance focused.

If you want to see learning connect to the business, this article by Jack and Patti Phillips outlines how to Create an Executive-Friendly Learning Scorecard.

What will you do?

Time to evaluate your evaluation! Take a look at your last project. Use the evaluation models and map what you did against the different levels, then look at your post-project review. There’s a great infographic in the deep dive section that will help with this.

Get in touch!

Contact us at easyA to explore how our learning solutions design team can work with your organisation: www.easyauthoring.com